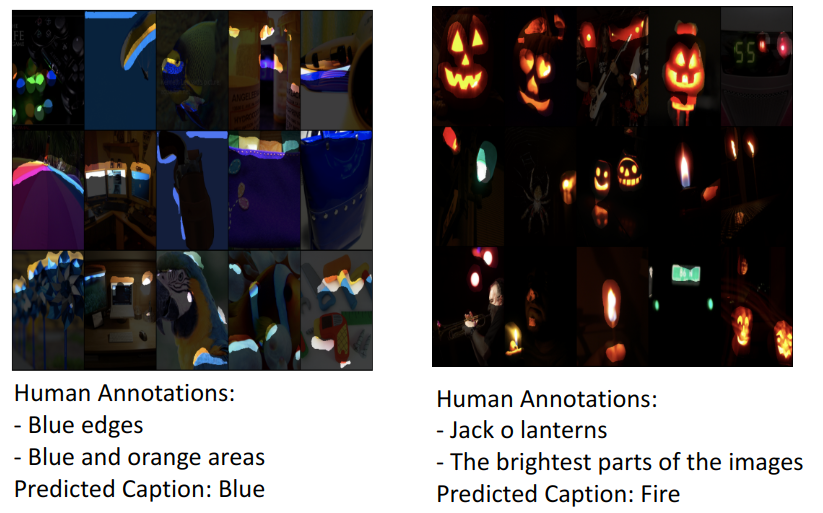

Interpreting Vision Models

I performed research on interpretable machine learning as part of the MIT Summer Research Program, working to improve MIT's MILAN, a model that annotates neurons. The project focused on describing the behavior of individual neurons. MILAN takes the 15 image regions that most strongly activate a neuron and generates compositional descriptions of the patterns shared by those regions. Although MILAN works well, it requires a large amount of training data, which limits its usefulness for other applications. My research addressed this problem by building a new implementation of MILAN: a model that uses Contrastive Language-Image Pre-training (CLIP) to generate one-word neuron annotations without any training data.

At the end of the program, I presented my work in a poster session and a lightning talk at the MIT summer research conference. I was then asked to continue the project with MIT through fall 2022 to begin work on multi-word compositional descriptions and on better descriptions overall, especially for abstract ideas.
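The zero-shot idea behind a CLIP-based one-word annotator can be sketched in a few lines. This is a minimal, hypothetical illustration, not the project's actual code. It assumes CLIP embeddings have already been computed for a neuron's top-activating image regions (with CLIP's image encoder) and for a vocabulary of candidate words (with CLIP's text encoder, for example from a prompt such as "a photo of a {word}"). The helper names `normalize` and `label_neuron`, the averaging step, and the toy 3-dimensional vectors are all assumptions made for illustration.

```python
import math
from typing import Dict, List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm (CLIP compares embeddings by cosine similarity)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def label_neuron(region_embeddings: List[Sequence[float]],
                 word_embeddings: Dict[str, Sequence[float]]) -> str:
    """Hypothetical helper: return the single word whose text embedding best
    matches a neuron's top-activating image regions. No training is involved;
    the label comes purely from embedding similarity (zero-shot)."""
    # Average the unit-normalized region embeddings into one prototype vector.
    units = [normalize(e) for e in region_embeddings]
    dim = len(units[0])
    prototype = normalize([sum(u[i] for u in units) / len(units) for i in range(dim)])

    # Score each candidate word by cosine similarity to the prototype.
    def score(word: str) -> float:
        w = normalize(word_embeddings[word])
        return sum(p * q for p, q in zip(prototype, w))

    return max(word_embeddings, key=score)


# Toy 3-d stand-ins for CLIP embeddings (real CLIP embeddings are much
# higher-dimensional, e.g. 512-d for ViT-B/32).
regions = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [1.0, 0.0, 0.05]]
vocab = {"dog": [1.0, 0.1, 0.0], "car": [0.0, 1.0, 0.0], "sky": [0.0, 0.0, 1.0]}
print(label_neuron(regions, vocab))  # → dog
```

Averaging the normalized region embeddings into a single prototype is only one design choice. Scoring each region separately and taking a majority vote over the best-matching words would be an equally plausible alternative.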